Search engine resources are finite. For sites exceeding tens of thousands of URLs, inefficient resource allocation directly impedes visibility. The objective is to direct spiders toward high-value, revenue-generating, or strategic pages while minimizing time spent on redundant or low-priority assets. Prioritized resource allocation via intelligent internal link structure is not merely a technical exercise; it is a fundamental pillar of scalable SEO strategy, ensuring that critical content achieves rapid indexing and optimal ranking potential. This guide details the protocols necessary to implement a prioritized internal architecture.

Defining Internal Priority Signals and Crawl Allowance

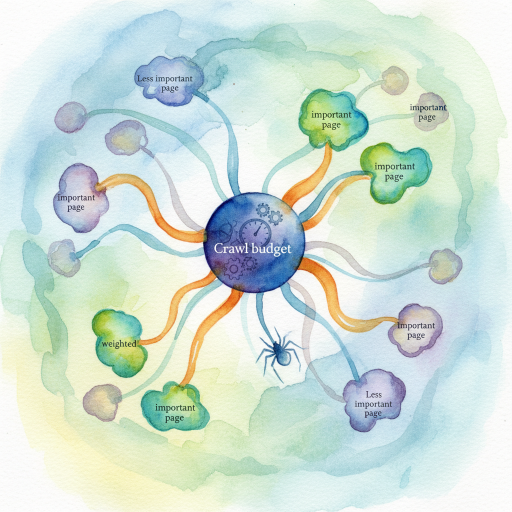

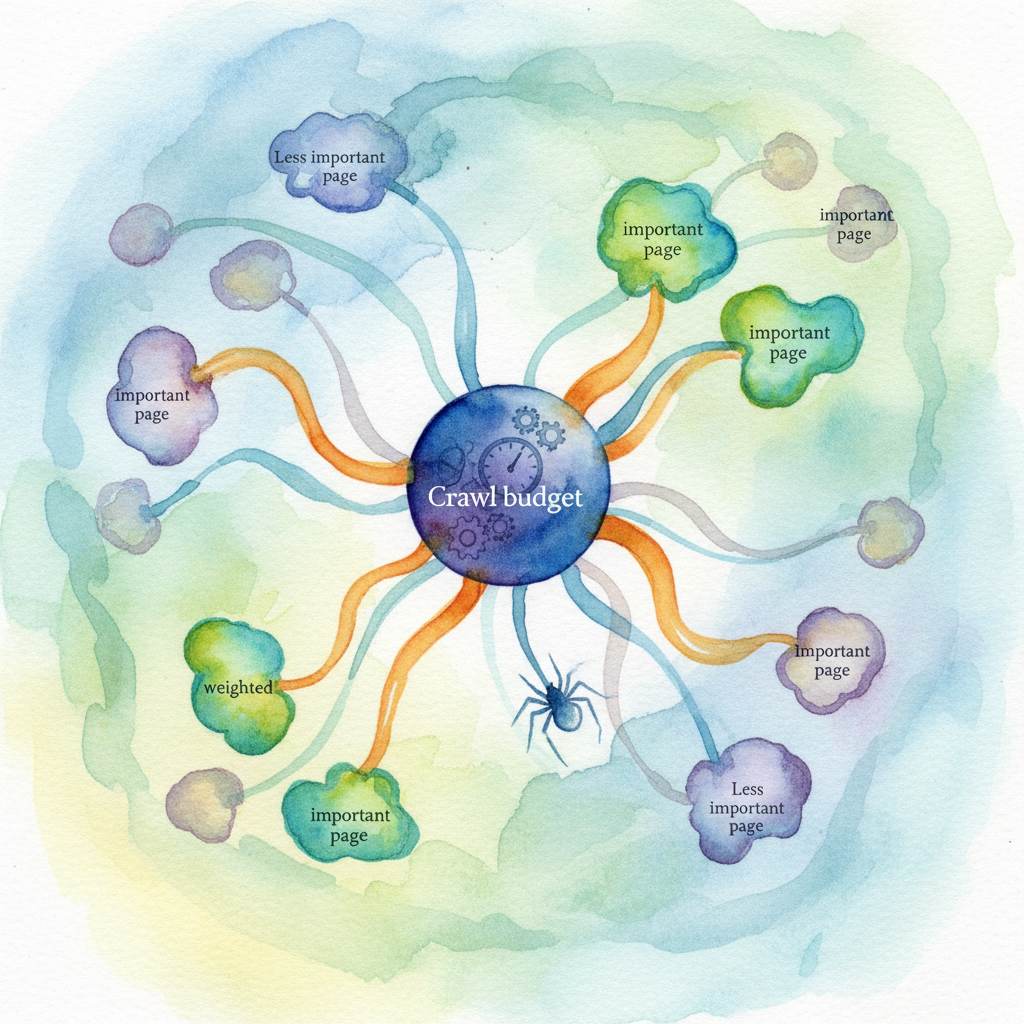

The site’s crawl allowance represents the total number of URLs a search engine bot (like Googlebot) is willing or able to crawl on a specific site within a given timeframe. This allowance is influenced by two primary factors: crawl rate limit (server capacity) and crawl demand (perceived freshness and authority).

Internal link authority distribution is the mechanism used to influence how this allowance is spent within the site. Strict PageRank sculpting is largely obsolete, because search engines treat nofollow as a hint and nofollowed URLs can still be queued for crawling, but the principle of directing internal priority signals remains critical. We must signal importance through strategic internal linking, URL structure, and rendering efficiency.

Crawl Signal Density (CSD)

We define Crawl Signal Density (CSD) as the cumulative value of internal links pointing to a specific URL, relative to the total number of indexable links on the site. High CSD pages receive more frequent visits, leading to faster discovery and re-indexing. Low CSD pages, conversely, should be deprioritized or consolidated.

Effective CSD management requires:

- Reducing noise: Eliminating unnecessary internal links from templates (e.g., date archives, redundant navigation elements).

- Concentrating equity: Ensuring high-authority pages (e.g., the homepage, major category hubs) link directly to the most critical pages requiring immediate indexing.

- Controlling depth: Minimizing the click depth (distance from the homepage) for priority content. Ideally, critical conversion pages should be 2–3 clicks deep.
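
To make the CSD definition concrete, the sketch below computes a score per URL from a list of internal links. It assumes every link carries the same value of 1, since no weighting scheme is specified here; the URLs and the `crawl_signal_density` helper are hypothetical.

```python
from collections import Counter

def crawl_signal_density(links):
    """Return {url: inbound_links / total_links} for (source, target) pairs.

    Assumption: every internal link has equal value (1). This is only the
    simplest reading of the CSD definition, not a standard metric.
    """
    # Ignore self-links, which add no signal
    edges = [(src, dst) for src, dst in links if src != dst]
    total = len(edges)
    if total == 0:
        return {}
    inbound = Counter(dst for _, dst in edges)
    return {url: count / total for url, count in inbound.items()}

# Hypothetical link graph: (source URL, target URL)
links = [
    ("/", "/services/"),
    ("/", "/blog/"),
    ("/blog/", "/services/"),
    ("/blog/post-1/", "/services/"),
    ("/blog/", "/blog/post-1/"),
]
csd = crawl_signal_density(links)
for url, score in sorted(csd.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{url}: {score:.2f}")
```

In this toy graph, `/services/` receives three of the five links and therefore the highest score. A production version would likely weight links by the authority of the source page rather than counting them equally.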

Strategic Internal Linking Architecture for Maximum Efficiency

A robust internal linking structure acts as a roadmap for both users and bots. When architecting this structure, focus on distributing authority in a manner that aligns with business value, not just structural convenience.

Identifying Indexable vs. Non-Indexable Assets

The first step in efficient resource management is distinguishing between assets that must be indexed and those that should be excluded. Non-indexable assets consume the allowance without providing ranking value.

| Asset Type | Indexing Goal | Link Weighting Protocol | Crawl Allocation Impact |
|---|---|---|---|
| High-Value Product/Service Pages | Must Index (High Priority) | Maximum internal links, high CSD score, minimal click depth. | High allocation; frequent recrawl. |
| Category/Hub Pages | Must Index (Authority Distribution) | Strong internal linking from homepage/sitemap, links to all sub-pages. | High allocation; acts as a crawl funnel. |
| Filtered Search Results/Sorting Pages | Do Not Index (Low Priority) | Use noindex, follow or canonicalization to primary page. | Minimal; focus on canonical signals. |
| Paginated Archives (Page 2+) | Deprioritize Crawl | Use rel="next"/rel="prev" (though deprecated, still useful for context) or strict canonicalization to Page 1. | Reduced allocation; minimize unnecessary deep crawling. |
| User Profile/Login Pages | Block Crawl | Disallow via robots.txt or use noindex, nofollow. | Zero allocation; essential for resource conservation. |
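
The asset types in the table can be turned into a first-pass URL classifier. This is a minimal sketch: the URL patterns, the policy labels, and the `classify` function are all assumptions for illustration and would need to match the site's real URL scheme.

```python
import re

# Hypothetical URL patterns; the first matching rule wins.
RULES = [
    (re.compile(r"^/(login|account|profile)(/|$)"), "block"),       # robots.txt Disallow
    (re.compile(r"[?&](sort|filter|color|size)="), "noindex"),      # noindex, follow or canonical
    (re.compile(r"[?&]page=(?!1(&|$))\d+"), "deprioritize"),        # pagination, page 2+
    (re.compile(r"^/(products|services)/"), "index-high"),          # high-value pages
    (re.compile(r"^/category/"), "index-hub"),                      # category/hub pages
]

def classify(url):
    """Return the policy label of the first rule matching `url`."""
    for pattern, policy in RULES:
        if pattern.search(url):
            return policy
    return "index-default"

for url in ["/login", "/category/shoes?sort=price", "/category/shoes?page=3",
            "/products/widget", "/category/shoes"]:
    print(url, "->", classify(url))
```

Rule order matters: the blocking and noindex rules come first so that a filtered category URL is never mistaken for an indexable hub.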

The Role of Anchor Text in Weight Distribution

Anchor text is not merely descriptive; it confirms the topical relevance of the destination URL, reinforcing the link’s value. Generic anchors (e.g., "click here," "read more") dilute the signal.

- Precision: Use exact match or closely related phrases for high-priority pages.

- Diversity: Avoid over-optimization; vary the anchor text slightly to reflect related long-tail queries.

- Context: Ensure the linking text is contextually relevant within the surrounding paragraph, affirming the link’s authority.
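
Generic anchors can be found automatically. The sketch below uses Python's standard `html.parser` to collect anchor text and flag generic phrases. The `GENERIC_ANCHORS` set extends the two examples above with a few assumed additions, and the sample HTML is made up.

```python
from html.parser import HTMLParser

# "click here" and "read more" come from the text above; the rest are assumptions.
GENERIC_ANCHORS = {"click here", "read more", "learn more", "here", "more"}

class AnchorCollector(HTMLParser):
    """Collect (href, anchor text) pairs from <a href="..."> elements."""

    def __init__(self):
        super().__init__()
        self.anchors = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse internal whitespace so "click   here" still matches
            text = " ".join("".join(self._text).split())
            self.anchors.append((self._href, text))
            self._href = None

def generic_anchors(html):
    parser = AnchorCollector()
    parser.feed(html)
    return [(href, text) for href, text in parser.anchors
            if text.lower() in GENERIC_ANCHORS]

html = ('<p>See our <a href="/services/seo-audit/">technical SEO audit</a> '
        'or <a href="/blog/post-1/">click here</a>.</p>')
print(generic_anchors(html))
```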

Practical Steps for Implementing Prioritized Resource Allocation

Successful implementation of prioritized resource allocation requires a cyclical process of auditing, pruning, and reinforcing link signals.

1. Conduct a Crawl Audit and Log Analysis

Analyze server logs to determine how Googlebot interacts with the site. Identify pages that are frequently crawled but rarely change (wasting resources) and pages that change often but are rarely crawled (missing indexing opportunities).

- Identify High-Frequency, Low-Value Crawls: These URLs are prime candidates for consolidation, noindex tags, or being blocked via robots.txt.

- Analyze Response Codes: Identify excessive 4xx (broken links) and 5xx (server errors). Every failed crawl attempt is wasted processing allowance. Fix these immediately.
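
A log audit of this kind can start from a short script. The sketch below assumes access logs in the common "combined" format and identifies Googlebot by user-agent string only; the sample lines are invented for illustration, and `googlebot_summary` is a hypothetical helper.

```python
import re
from collections import Counter

# Matches the request, status, and final quoted field (user agent) of a combined-format line
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3}) .*"(?P<agent>[^"]*)"$'
)

def googlebot_summary(lines):
    """Return (hits per path, error counts per (path, status)) for Googlebot requests."""
    hits = Counter()
    errors = Counter()
    for line in lines:
        match = LOG_LINE.search(line.strip())
        if not match or "Googlebot" not in match["agent"]:
            continue
        hits[match["path"]] += 1
        if match["status"][0] in "45":  # 4xx and 5xx responses
            errors[(match["path"], match["status"])] += 1
    return hits, errors

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Made-up sample log lines
sample = [
    f'66.249.66.1 - - [10/Jan/2024:10:00:00 +0000] "GET /archive/2019/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:05:00 +0000] "GET /archive/2019/ HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2024:10:06:00 +0000] "GET /old-product/ HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Jan/2024:10:07:00 +0000] "GET /services/ HTTP/1.1" 200 8200 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]
hits, errors = googlebot_summary(sample)
print(hits.most_common(5))
print(dict(errors))
```

User-agent strings can be spoofed, so a production audit should also verify that requests really come from Google (for example via reverse DNS lookup) before drawing conclusions.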

2. Prune Low-Value Internal Links

Review site templates and navigation elements. If a link appears on every page but points to a low-value asset (e.g., a lengthy, outdated privacy policy archive), remove the link from the template or apply a nofollow attribute if removal is impossible.

Example: Pruning Template Links

If a site has 100,000 pages, a link in the footer to an "About Our History" page generates 100,000 internal links. If this page has low strategic value, that link equity is better redirected to a key service page.

3. Implement the Priority Signaling Protocol

Prioritize the distribution of link equity using the Priority-Authority-Relevance (P-A-R) ratio, grouping pages into tiers from P1 (highest priority) to P3 (lowest).

- Identify P1 Assets: Pages critical for revenue (e.g., conversion funnels, primary category pages). These must receive the highest CSD score.

- Reinforce P1 Links: Ensure P1 assets are linked directly from the homepage and high-authority hub pages, using descriptive anchor text.

- Deprioritize P3 Assets: Pages that are necessary but not for ranking (e.g., old press releases, archived comments). Use methods like reducing link count, increasing click depth, or applying noindex, follow.

Key Takeaway: Internal priority signaling is a resource allocation mechanism. By deliberately concentrating internal authority signals on high-priority URLs, site owners can force a more efficient utilization of the allocated crawl allowance, accelerating the discovery and ranking of critical assets.

Continuous Auditing

Use tools like Google Search Console’s Coverage report (now labeled "Page indexing") and URL Inspection tool to verify that the implemented weighting protocols result in the desired crawl behavior. Look for improvements in the "Crawl Stats" report, specifically noting a shift in crawling activity toward your P1 assets.

Common Misconceptions Regarding Indexing Signals

Understanding how search engines interpret signals is crucial for advanced SEO strategy. Misapplication of directives can inadvertently waste crawl allowance.

Is using nofollow enough to save crawl allowance?

No. While nofollow discourages the flow of link equity, Google often still queues the linked URL for potential crawling, especially if it finds other signals pointing to that URL. To actually stop crawling, use robots.txt disallow directives. A noindex, follow tag on the page itself keeps the page out of the index, but the page must still be crawled for the tag to be read, so any crawl savings arrive only gradually.

Does increasing server speed automatically increase the overall allocation?

Faster server response times (low latency) increase the crawl rate limit because the bot can process more requests without overloading the host. However, the crawl demand (the perceived need to crawl the site) must also be high for the allowance to increase significantly.

Should I use robots.txt to block all low-value pages?

Only block pages that you absolutely do not want Google to crawl or discover. If a page is blocked via robots.txt but linked internally, Google may still index the URL without crawling the content, leading to a "No information is available for this page" snippet. Use noindex for pages you want Google to know about but not index.
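
Before deploying new disallow rules, it helps to confirm that they block only what is intended. The sketch below uses Python's standard `urllib.robotparser` against an inline robots.txt; the rules and paths are hypothetical. Note that this parser handles simple path prefixes and does not implement Google's wildcard extensions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content
ROBOTS_TXT = """\
User-agent: *
Disallow: /login/
Disallow: /profile/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Check a mix of pages that should and should not be crawlable
results = {}
for path in ["/login/", "/profile/jane", "/services/seo-audit/"]:
    results[path] = parser.can_fetch("Googlebot", path)
    print(path, "crawlable" if results[path] else "blocked")
```

Running the same check over the list of P1 URLs is a cheap way to catch a rule that accidentally blocks a priority page.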

Does redirecting old URLs save indexing resources?

Yes, but only if implemented correctly. Excessive redirect chains (301 > 302 > 301) waste the allowance by forcing the bot to follow multiple hops. Ensure all redirects are direct (one hop) and permanent (301) when consolidating content.
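
Redirect chains can be detected offline from an exported redirect map. The sketch below assumes the map is available as a dictionary of `{source: (status, target)}`; the URLs and both helper functions are hypothetical.

```python
def resolve_redirect(url, redirects, max_hops=10):
    """Follow `redirects` ({source: (status, target)}) starting at `url`.

    Returns (final_url, hops), where hops is a list of (status, target) tuples.
    """
    hops = []
    seen = {url}
    while url in redirects and len(hops) < max_hops:
        status, target = redirects[url]
        hops.append((status, target))
        if target in seen:  # redirect loop: stop following
            break
        seen.add(target)
        url = target
    return url, hops

def needs_fix(hops):
    """Flag chains longer than one hop, or any hop that is not a 301."""
    return len(hops) > 1 or any(status != 301 for status, _ in hops)

# Hypothetical redirect map
redirects = {
    "/old-a": (301, "/old-b"),
    "/old-b": (302, "/old-c"),
    "/old-c": (301, "/new"),
    "/old-d": (301, "/new"),
}
for start in ["/old-a", "/old-d"]:
    final, hops = resolve_redirect(start, redirects)
    print(start, "->", final, f"({len(hops)} hop(s), fix needed: {needs_fix(hops)})")
```

Here `/old-a` takes three hops through a temporary redirect and is flagged, while `/old-d` is already a single permanent hop. The fix for flagged sources is to point each one directly at the final URL.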

How does site structure depth affect indexing speed?

Content buried deep (5+ clicks from the homepage) receives significantly less link equity and is crawled less frequently. Shallow, logical structures (2–3 clicks) ensure faster discovery and higher CSD scores, directly benefiting indexing speed.

Are XML Sitemaps a substitute for strong internal linking?

Sitemaps are a suggestion, not a command. They help bots discover pages they might miss, but they do not distribute link equity or authority. Strong internal linking remains the primary method for signaling importance and distributing weight.
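
One practical consequence is that URLs listed in the sitemap but never linked internally receive no link signals at all. The sketch below finds such orphans by comparing a sitemap with a list of crawled link targets. The sitemap content and the `linked` list are invented; in practice the link targets would come from a site crawler export.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text):
    """Return the set of <loc> values in a urlset sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}

def orphaned(sitemap, linked_targets):
    """URLs present in the sitemap but absent from internal link targets."""
    return sorted(sitemap - set(linked_targets))

# Hypothetical sitemap and crawl data
xml_text = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/services/</loc></url>
  <url><loc>https://example.com/services/legacy-offer/</loc></url>
</urlset>"""
linked = ["https://example.com/services/"]

orphans = orphaned(sitemap_urls(xml_text), linked)
print(orphans)
```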

If I use JavaScript for internal links, does it impact link value distribution?

Modern search engines render JavaScript, but rendering requires resources and time. Links rendered late or conditionally may receive less weight initially. Prioritize static HTML links for critical P1 assets to guarantee immediate signal transmission.

Implementing a Data-Driven Priority Signaling Protocol

Effective priority signaling is an ongoing optimization task that requires precise execution and continuous validation against crawl data.

1. Standardize Link Allocation

Establish a clear protocol for how many internal links each type of page receives and where those links originate.

- Header/Footer Links: Reserve these high-visibility slots only for the absolute highest-priority category or service hubs.

- Contextual Links: Mandate that all new content includes 3–5 highly relevant contextual links to P1 or P2 assets.

- Deprioritization: Use URL parameters or specific path structures (e.g., /archive/) to group low-priority content, making it easier to manage crawl directives via robots.txt or canonical tags.

2. Monitor and Adjust Crawl Rate

Use the "Crawl Stats" report in Google Search Console to monitor the average time spent downloading a page and the total number of pages crawled per day. If the average download time increases, it signals server stress or inefficient rendering, demanding immediate attention to render-blocking resources or server performance.
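
If daily figures are exported from the report, a simple check can flag the days that need attention. The sketch below compares each day with the median of the preceding week; the `window` and `threshold` values and the sample numbers are assumptions, not recommended settings.

```python
from statistics import median

def flag_slow_days(daily_ms, window=7, threshold=1.5):
    """Return (index, value) for days whose average download time exceeds
    `threshold` times the median of the preceding `window` days."""
    flagged = []
    for i in range(window, len(daily_ms)):
        baseline = median(daily_ms[i - window:i])
        if daily_ms[i] > threshold * baseline:
            flagged.append((i, daily_ms[i]))
    return flagged

# Made-up daily average download times in milliseconds
daily_ms = [310, 295, 320, 305, 300, 315, 298, 302, 640, 655]
slow = flag_slow_days(daily_ms)
print(slow)
```

A median baseline is used so that a single slow day does not immediately raise the bar for the days that follow.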

3. Calculating Link Value Decay

Understand that the value of a link decays based on its distance from high-authority sources. Implement a system where P1 pages are never more than three clicks away from the homepage, and ideally no more than two.

Action Example: Internal Priority Audit

- Map Current Depth: Use a site crawler to identify all URLs 4+ clicks deep.

- Assess Value: Determine if these deep URLs are P1 assets (they shouldn’t be).

- Reroute Equity: If a P1 asset is found deep, restructure the internal linking to place a direct link to it from a high-authority hub page (e.g., a primary category page), reducing its click depth to 2 or 3. This immediate re-allocation of link weight signals higher priority to the crawling mechanism.
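
The audit steps above can be sketched with a breadth-first search over the internal link graph. The graph is assumed to be a dictionary mapping each page to the pages it links to, as a site crawler might export; the URLs and function names are hypothetical.

```python
from collections import deque

def click_depths(graph, home="/"):
    """Breadth-first search from the homepage; returns {url: click depth}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in graph.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def deep_priority_pages(graph, p1_urls, max_depth=3, home="/"):
    """Return P1 URLs deeper than `max_depth`, or unreachable (depth None)."""
    depths = click_depths(graph, home)
    return {url: depths.get(url) for url in p1_urls
            if depths.get(url) is None or depths[url] > max_depth}

# Hypothetical link graph: page -> pages it links to
graph = {
    "/": ["/category/"],
    "/category/": ["/category/sub/"],
    "/category/sub/": ["/category/sub/list/"],
    "/category/sub/list/": ["/services/seo-audit/"],
}
p1 = ["/services/seo-audit/", "/category/"]
before = deep_priority_pages(graph, p1)
print("Before:", before)

# Reroute equity: add a direct link from the category hub
graph["/category/"].append("/services/seo-audit/")
after = deep_priority_pages(graph, p1)
print("After:", after)
```

In this example the service page starts at depth 4 and is flagged; adding one link from the category hub brings it to depth 2 and clears the report.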

Prioritized Indexing: A Technical Guide to Efficient Resource Allocation